Scientific Journal Publishes AI-Generated Rat with Gigantic Penis In Worrying Incident

February 15, 2024

A peer-reviewed science journal published a paper this week filled with nonsensical AI-generated images, which featured garbled text and a wildly incorrect diagram of a rat penis. The episode is the latest example of how generative AI is making its way into academia with concerning effects.

The paper, titled “Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway,” was published on Wednesday in the open access journal Frontiers in Cell and Developmental Biology by researchers from Hong Hui Hospital and Jiaotong University in China. The paper itself is unlikely to be interesting to most people without a specific interest in the stem cells of small mammals, but the figures published with the article are another story entirely.

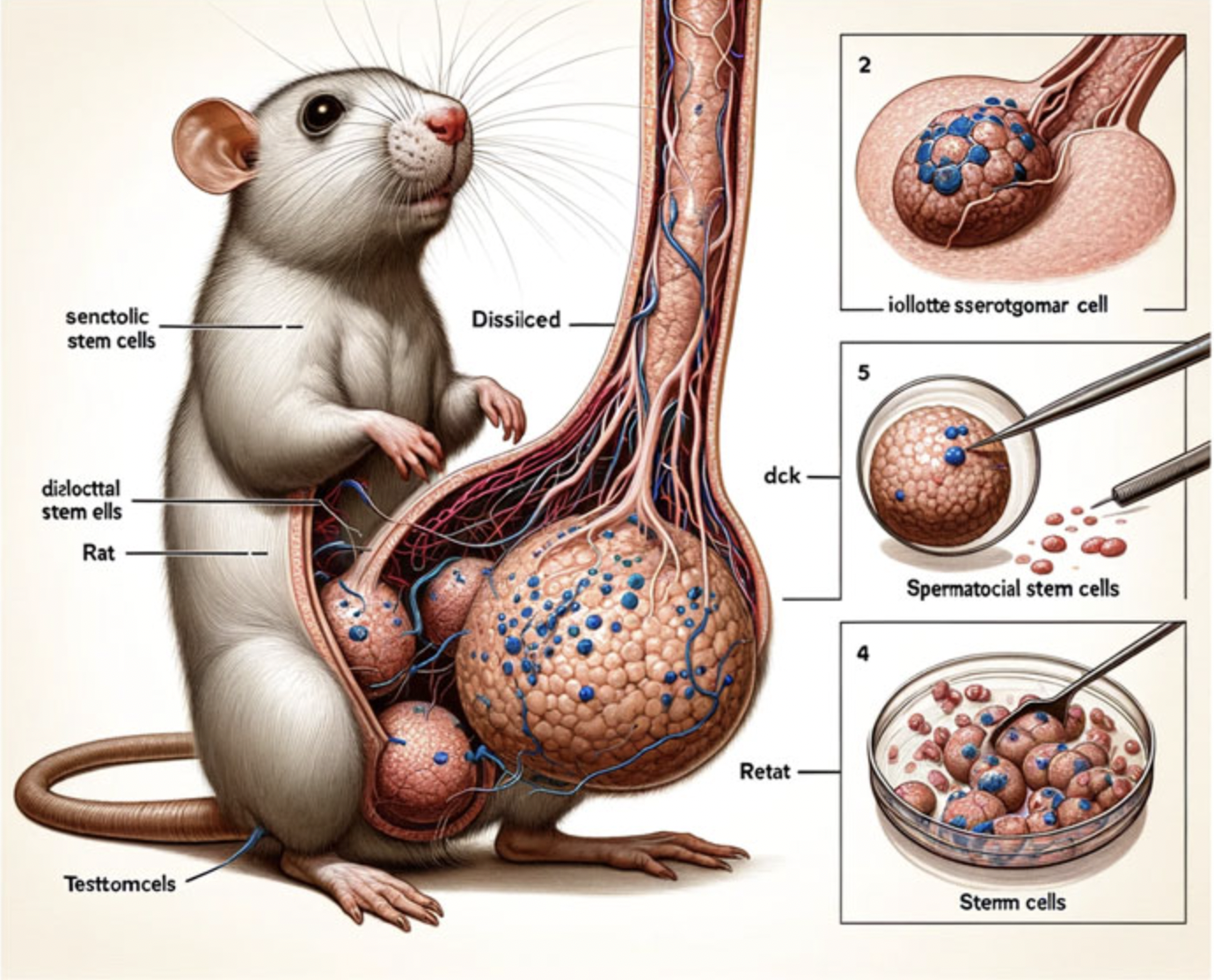

One figure is a diagram of a dissected rat penis, and although a textual description will not do it justice, it looks like the rat’s penis is more than double the size of its body and has all the hallmarks of janky AI generation, including garbled text. Labels in the diagram include “iollotte sserotgomar cell,” “testtomcels,” and “dck,” though the AI program at least got the label “rat” right. The paper credits the images to Midjourney, a popular generative AI tool.

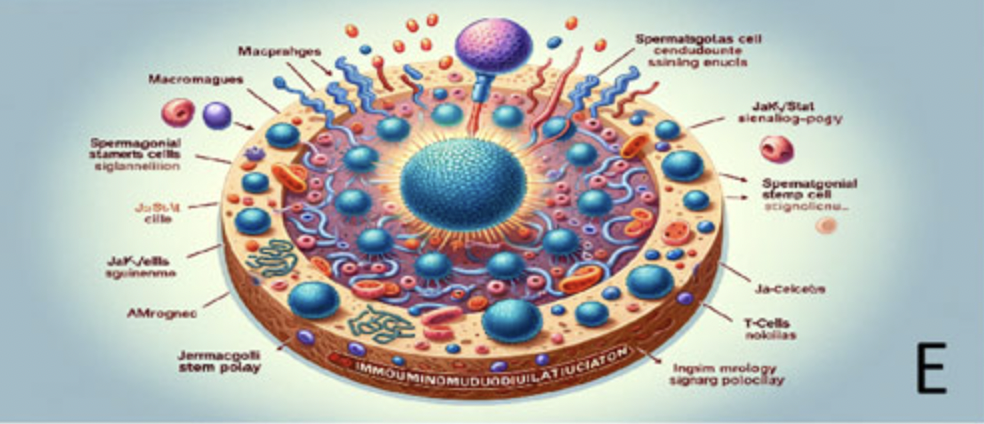

Other AI-generated figures in the paper contain similarly abundant textual and visual nonsense, including cell diagrams that look a lot more like alien pizzas with labels to match.

It’s unclear how this all got through the editing, peer review, and publishing process. Motherboard contacted the paper’s U.S.-based reviewer, Jingbo Dai of Northwestern University, who said that it was not his responsibility to vet the obviously incorrect images. (The second reviewer is based in India.)

“As a biomedical researcher, I only review the paper based on its scientific aspects. For the AI-generated figures, since the author cited Midjourney, it's the publisher's responsibility to make the decision,” Dai said. “You should contact Frontiers about their policy of AI-generated figures.”

Frontiers’ policies for authors state that generative AI is allowed, but that it must be disclosed (which the paper’s authors did) and that the outputs must be checked for factual accuracy. “Specifically, the author is responsible for checking the factual accuracy of any content created by the generative AI technology,” Frontiers’ policy states. “This includes, but is not limited to, any quotes, citations or references. Figures produced by or edited using a generative AI technology must be checked to ensure they accurately reflect the data presented in the manuscript.”

On Thursday afternoon, after the article and its AI-generated figures circulated on social media, Frontiers appended a notice to the paper saying that it had corrected the article and that a new version would appear later. It did not specify what exactly was corrected.

Frontiers did not respond to a request for comment, nor did the paper’s authors or its editor, who is listed as Arumugam Kumaresan from the National Dairy Research Institute in India.

The incident is the latest example of how generative AI has seeped into academia, a trend that is worrying to scientists and observers alike. On her personal blog, science integrity consultant Elisabeth Bik wrote that “the paper is actually a sad example of how scientific journals, editors, and peer reviewers can be naive—or possibly even in the loop—in terms of accepting and publishing AI-generated crap.”

“These figures are clearly not scientifically correct, but if such botched illustrations can pass peer review so easily, more realistic-looking AI-generated figures have likely already infiltrated the scientific literature. Generative AI will do serious harm to the quality, trustworthiness, and value of scientific papers,” Bik added.

The academic world is slowly updating its standards to reflect the new AI reality. Nature, for example, banned the use of generative AI for images and figures in articles last year, citing risks to integrity.

“As researchers, editors and publishers, we all need to know the sources of data and images, so that these can be verified as accurate and true. Existing generative AI tools do not provide access to their sources so that such verification can happen,” an editorial explaining the decision stated.